Juice, Errr, Ohh…..

Background

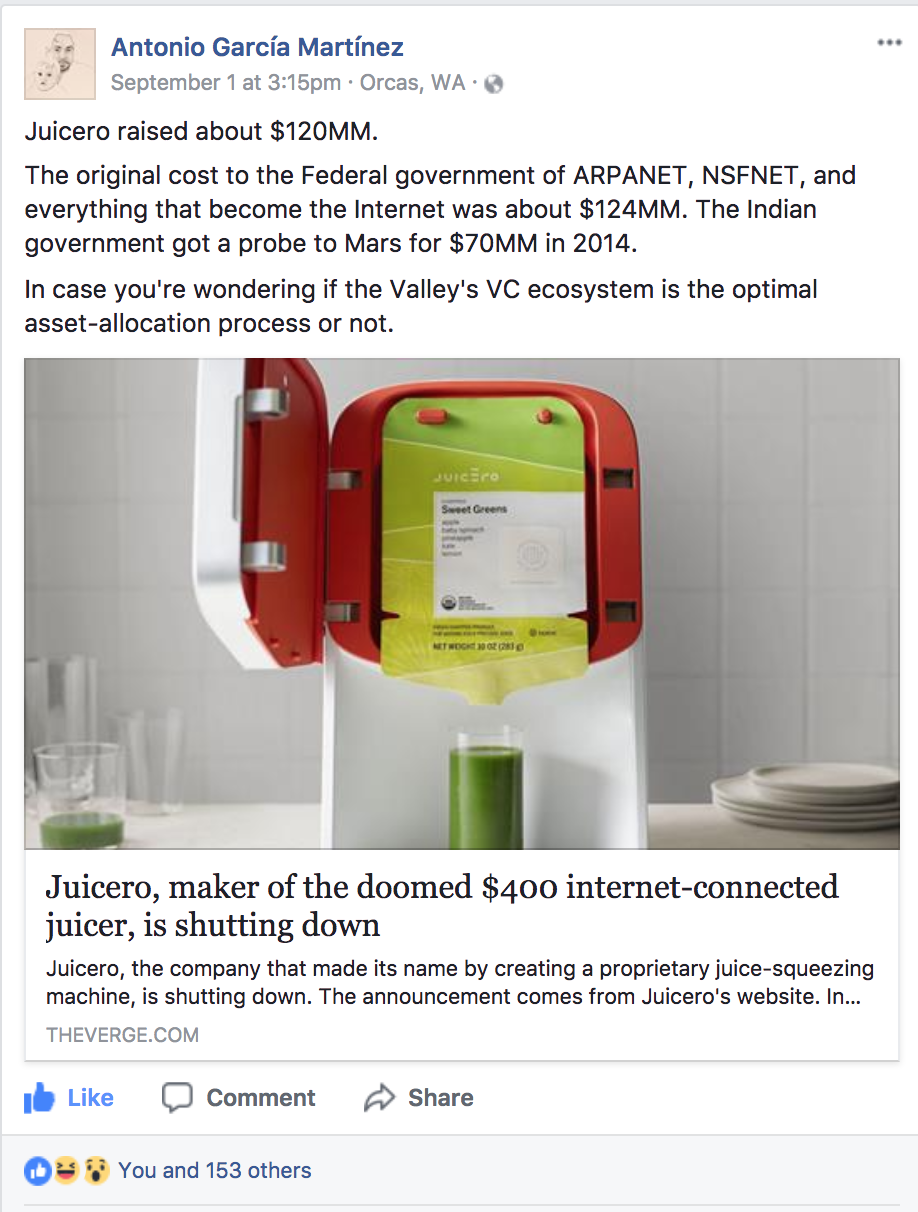

On September 1st, 2017 I was scrolling through my Facebook feed when I encountered this post from Chaos Monkeys author Antonio Garcia-Martinez.

Soon thereafter, a spark was lit that got me thinking about creating this 2 part blog post each achieving something I’d intended to explore in a public post.

The story of Juicero, a soon-to-be defunct venture funded cold-pressed juicing company, provides an ideal canvas to demonstrate how one can combine 2 of my packages fundManageR and gdeltr2 to paint a picture of what occurred, who was associated with the company, the amount of capital raised by the company and how the media has portrayed recent events. This will be part 1 of the post.

Antonio and Juicero inspired me to set out to do something I had been thinking about trying to figure out how to do but hadn’t and that is:

Use machine learning to generate fake start ups

We will explore this in part 2 of the post.

Introspective on my venture investing experience.

Before we get into the meat of this post Juicero’s story reminded me of a major lesson I learned early in my seed/venture investing days, it was a lesson I was made aware of years before but ignored.

Peter Linneman’s Real Estate 209 message

One of the most memorable lessons I took from college was something my professor Peter Linneman said on the first day of his class to a room full of eager, self-confident, and somewhat arrogant Wharton undergraduates back in September of 2004.

If you want to try to understand most Americans you need to stop reading The New York Times and Wall Street Journal.

His point was simple yet powerful.

Those of us “coastal elites” who obsess over the day-to-day come-up-ins of something like financial world, don’t forget, most of the rest of the people in the United States don’t care and it isn’t because they are stupid it is because whatever it is probably isn’t that important.

If you set out to build to service the needs of the masses don’t just blindly assume they care about all the things you and your network care about.

Peter’s message was, and still is an important one. Don’t take yourself too seriously and don’t think that your fancy education makes you qualified to know what is best for everyone or what will be successful.

Over the years Peter has grown to be one of my most respected mentors and overall favorite people. I often reflect on his fantastic Life Lessons and it all started with that lesson from his first day of class.

Peter’s expertise is in real estate finance and that message was in the context of our class but I found what he had to say has major power in the world of venture investing. In fact, I have found some of the most extreme cliquish mentality among some in the most prominent and powerful roles in Silicon Valley.

When investing in an venture funded idea, be it a physical product like Juicero or a software platform and one of my favorite seed personal investments OpenGov there are 2 key variables to think about in terms of the market for the idea.

- Scalability

- Adoption

Is the product or technology something that appeals to people not just in Los Angeles or Brooklyn but in Cambria Indiana or Waterbury Connecticut?

Do enough people in this country or world REALLY need whatever the pitch is to compensate me for my risk? One needs to really think about these questions as they diligence a pitch.

I learned this lesson the hard-way on some of my earliest investments where I didn’t think long and hard enough about those questions and funded ideas that tried to solve “coastal only” problems.

Needless to say those investments didn’t pan out but the taught me a good lesson, something I’d already been taught by one of my favorite teachers, ask yourself how someone very different from you benefits from the idea seeking funding and no matter how much may be convinced the world needs whatever the idea being pitched is, try playing devil’s advocate for a bit and see how you come out.

I am not assuming that investors in Juicero didn’t do these things but my guess is that if some had followed this advice a bit more they would have avoided what will likely be a 100% loss on this investment.

Now lets get in to the meat of this tutorial.

Part 1: Peeling back the Juicero onion

In this section we are going to demonstrate how to use fundManageR to explore Juicero’s finances and entities that may have exposure to their likely bankruptcy.

After that we are going to use gdeltr2 to see what the media has been saying about the recent events at Juicero.

Juicero’s verifiable finances

One of the things I learned quickly as someone who has been a party to some complicated transactions that garnered serious media attention, writers rarely get the numbers right and often don’t verify their claims with a reputable clearinghouse. Finance can be a bit complicated and more often than not a number will get out there for whatever reason and it will stick even if it has no validity.

One of the characteristics of a good writer and of course investor is to do the dirty work needed to find out a verifiable truth. Many of my public packages try to make that a bit easier, functional and automated.

One of the many the many features integrated into fundmanageR to assist in this is a full wrapper of nearly all the data available through the Securities and Exchange Commission, including EDGAR.

Often times when an entity raises money they have to file what is called a Form D containing a bunch information about the exempt securities offering.

This gives us an ability to confirm certain amounts of actually raised funding. These forms are often not completely accurate reflections of how much funding was raised when all is said and done. There are also often time lags between funding close and form filing.

So did Juicero really raise $120,000,000???

Maybe, but I am extremely skeptical and fundManageR can help us better understand this question.

Before we explore this, you need to make sure you have the packages we are about to use installed and loaded.

devtools::install_github("abresler/fundManageR")

library(fundManageR)

library(dplyr) # install.packages('dplyr') if you don't have it

library(highcharter) # install.packages('highcharter') if you don't have itJuicero’s verified capital raises

Now we are ready to use fundManageR’s EDGAR wrapper to explore and visualize the data related to Juicero’s verified capital raises.

The function get_data_sec_filer will allow us to explore the data.

df_juicero <- fundManageR::get_data_sec_filer(entity_names = "Juicero", parse_form_d = TRUE)Once the function is complete you will notice a number of data frames in your environment containing the information parsed from EDGAR.

You can explore the individual data frames if you wish but for now we want to focus on the dataFilerFormDsOfferingSalesAmounts data which contains information about the amount of capital raised in each filed Form D.

You will notice there are 2 entities that matched our search termJuicero.

We want to focus on the Juicero corporate entity whose CIK number is 1588182. The other entity appears to be a sidecar that may be affiliated with a Juicero financing round but we aren’t sure so we should exclude that.

After we select only the Juciero corporate entity we need to tidy the data a bit so we can focus on the amount of capital solicited versus the amount of capital raised. W can then take that and interactively explore the end result.

library(dplyr) # install.packages(dplyr)

library(tidyr) # install.packages(tidyr)

library(highcharter) # install.packages("highcharter")

## Filter and Munge

df_juicero_d <-

dataFilerFormDsOfferingSalesAmounts %>%

filter(idCIK == 1588182) %>%

select(dateFiling, amountSoldTotal, amountOfferingTotal) %>%

gather(item, value, -dateFiling) %>%

mutate(item = item %>% as.factor())

df_juicero_d %>%

hchart(type = "area", hcaes(x = dateFiling, y = value, group = item)) %>%

hc_tooltip(sort = TRUE, table = TRUE) %>%

hc_tooltip(split = TRUE) %>%

hc_add_theme(hc_theme_smpl()) %>%

hc_title(text = "Juicero Inc Form D Verified Capital Raises",

useHTML = TRUE) %>%

hc_subtitle(

text = "Data from the SEC via fundManageR",

useHTML = TRUE

) %>%

hc_plotOptions(series = list(

marker = list(enabled = FALSE),

cursor = "pointer",

states = list(hover = list(enabled = FALSE)),

stickyTracking = TRUE

)) %>%

hc_xAxis(

type = "datetime",

gridLineWidth = 0,

lineWidth = 1,

lineColor = "black",

tickWidth = 1,

tickAmount = 15,

tickColor = "black",

title = list(text = "Filing Date", style = list(color = "black")),

labels = list(style = list(color = "black"))

)What can we take from this?

There is no way for us tto confirm that Juicero actually raised $120,000,000.

In fact we can only be sure that they raised $19,881,830.

That said, absence of evidence does not mean definite absence. There are forms of capital that often go undisclosed to the SEC and it is possible that Juicero raised the amount of money we cannot account for via those means. That said, I find it highly unlikely that Juicero really raised $120,000,000 and if I were an editor at a media entity I would not use that information based upon the facts that we have at this point.

It turns out we maybe able to fill in the missing alleged money another way also using fundManageR and that is by searching through the text of the last 3 years of every EDGAR filing.

EDGAR full text search

The get_data_edgar_ft_terms does this for us.

Let’s see what searching for Juicero turns up.

df_juicero_ft <- fundManageR::get_data_edgar_ft_terms(search_terms = "\"Juicero\"",

include_counts = FALSE)Let’s pare down the variables and take a look at the results.

library(DT) # install.packages("formattable")

library(glue) # install.packages("glue")##

## Attaching package: 'glue'## The following object is masked from 'package:dplyr':

##

## collapsedf_juicero_ft %>%

select(dateFiling:urlSECFiling) %>%

select(-countFilings) %>%

mutate(urlSECFiling = glue::glue("<a href = '{urlSECFiling}' target = '_blank'>Filing</a>")) %>%

group_by(idCIKFiler, dateFiling) %>%

arrange(desc(dateFiling)) %>%

datatable(filter = 'top', options = list(

pageLength = 5, autoWidth = TRUE

), escape = FALSE)What does this tell us?

Our full text search unearthed some valuable information:

- Campbell’s Soup invested in $13,500,000 in Juicero and a high level employee left to become CEO of Juicero

- Juicero licensed important technology from another public company, Landec Corporation

- A commercial mortgage was securitized with a Juicero rent comparable

- Juicero’s equity was placed in a couple of sidecars including at Next Play Capital and A-List ventures, an Angelist syndicate.

None of this information confirms Juicero has actually raised $120,000,000.

The new Campbell Soup disclosure and the sidecars formed in 2016 do lead me to believe the amount of funding Juicero raised is likely higher than the confirmed amount from the Form Ds but we cannot be absolutely sure.

Going these functions may be useful assisting us in monitoring any new developments as Juicero is dissolved.

It is possible that upon an actual bankruptcy, Campbell’s Soup will be forced to file information with the SEC that details what happened to their investment but we will have to just wait and see.

GDELT, what happend at Juicero?

It may be some time until we get an accurate depiction of what caused the demise of Juicero but it is still worthwhile to sift through the entire media universe to find stories about Juicero for us to explore.

To do this we will use the gdeltr2 package. For those of you new to gdeltr2 I recommend you take a look at my last blog post which walks you through an in depth tutorial of the package and the functions we are about to use to explore this topic.

Juicero Stories

The first thing we are going to do is find all the articles that refer to Juicero from the last 12 weeks. In addition to that we will take advantage of Google Cloudvision integration and also look for any photo that contains the text Juicero.

Also in order to circumvent the 250 result API limit per call we will refer directly to each day over the last 12 weeks to generate an API call for each day using the gdeltr2::generate_dates function.

This will create an interactive display with all the matched stories for us to explore.

library(gdeltr2) # devtools::install_githu('abresler/gdeltr2') if you need the package

dates <- gdeltr2::generate_dates(start_date = Sys.Date() - 84, end_date = Sys.Date(),

time_interval = "days")

gdeltr2::get_data_ft_v2_api(terms = c("\"Juicero\""), images_ocr = c("Juicero"),

dates = dates, timespans = NA)Timeline

Next we will generate an interactive showcasing the volume of stories matching our 2 Juicero search parameters. This will help us identify when the media picked up on things going bad at the company.

gdeltr2::get_data_ft_v2_api(terms = c("\"Juicero\""), images_ocr = c("Juicero"),

modes = "timelinevolinfo", timespans = "12 Weeks")Wordcloud

Finally we will generate wordclouds of the parsed text over a 2 and 12 week time span to allow us to quickly explore the words most associated with the Juicero stories.

gdeltr2::get_data_ft_v2_api(terms = c("\"Juicero\""), images_ocr = c("Juicero"),

modes = c("WordCloudEnglish"), trelliscope_parameters = list(rows = 1, columns = 1),

timespans = c("12 weeks", "2 weeks"))Wrapping up Part 1

This wraps up our direct exploration of Juicero. I hope it demonstrated how to use each of my packages to unearth meaningful information about an entity and that it showcased how easily you can process large volumes of information to try to get at potential truths.

In the next section will transition into something completely different but truly the most fun and exciting thing I have done in my programming adventures and that is using machine learning to generate fake start-ups.

Part 2 – Generating fake start-ups with machine learning

Juicero’s story reminded me of I question I often ask myself upon hearing that a company whose product/business I don’t understand raises a boat-load of venture money.

Could a monkey throwing a dart at a start-up business plan dartboard come up with a better idea?

That got me thinking, instead of a monkey how about a computer and how could I use my Angus McGuyver skills to actually do this??

After thinking for a second I remembered that one of the many data-stores fundManager wraps is Y Combinator [YC], the world’s leading start-up incubator.

YC has a public API containing information about the over 1300 companies that have graduate YC. This API includes a company name and elevator pitch style description. The perfect inputs to build a machine learning model to generate fake start-ups complete with their own name and description.

There are a number of different ways to use a computer to achieve my goal but I ultimately settled on using 2 fairly advanced machine learning methods.

A neural network for the name generator and a hidden Markov model for the descriptions.

For each method we are going to build a generalized function that will take in text and other parameters to produce machine learning driven text. This will make it easy for us to re-use the functions for other text exploration adventures.

Step 0 – Pre-requisites

In addition to R you need Python 2 installed along with the Tensorflow, Keras and Markovify modules.

You can follow the Keras/Tensorflow installation instructions here

To install Markovify you can run this code inside of R

system("pip install markovify")Once this is completed we get into turning these ideas into code.

Step 1 – Load the packages

Now we are ready to load the packages needed to build, run and explore our functions.

It is important that you install the packages I am using if you don’t already have them.

package_to_load <- c("fundManageR", "purrr", "stringr", "dplyr", "rlang", "reticulate",

"keras", "tokenizers", "tidyr", "glue", "DT", "formattable")

lapply(package_to_load, library, character.only = T)Step 2 – Refine the YC data

Before we get started on the machine learning lets spend a few moments exploring and further refining the YC data.

To acquire YC alumni we use fundManageR:::get_data_ycombinator_alumni function.

Lets bring in this data.

df_yc <- fundManageR::get_data_ycombinator_alumni()

Let’s explore the structure of this data to see if we can come up with some additional filters.

df_yc %>%

glimpse()## Observations: 1,320

## Variables: 9

## $ nameCompany <chr> "COCUSOCIAL", "WILDFIRE", "HOTELFLEX", "ONC...

## $ isCompanyDead <lgl> FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, F...

## $ isCompanyAcquired <lgl> FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, N...

## $ batchYC <chr> "s2017", "s2017", "s2017", "s2017", "s2017"...

## $ yearYC <dbl> 2017, 2017, 2017, 2017, 2017, 2017, 2017, 2...

## $ nameSeasonYC <chr> "SUMMER", "SUMMER", "SUMMER", "SUMMER", "SU...

## $ urlCompany <chr> "http://www.cocusocial.com", "https://www.w...

## $ verticalCompany <chr> "Marketplace", NA, "B2B", NA, "Education", ...

## $ descriptionCompany <chr> "Marketplace for local activities.", "Wildf...Thinking a step ahead towards the machine learning we are about to do it should be useful to really refine the YC data to allow us to generate machine learning output for specific company verticals, seasons, years, and so on.

To do this we will build a function that refines the YC alumni data for the parameters mentioned above in addition to a few other parameters and data cleaning tasks.

The function takes a data frame containing the YC alumni and returns the filtered results you specify.

filter_yc_alumni <-

function(data,

years = NULL,

verticals = NULL,

season = NULL,

exclude_acquisitions = TRUE,

only_defunct_companies = FALSE) {

## remove acronyms

data <-

data %>%

separate(nameCompany, sep = '\\(|\\/', c("nameCompany", "acronymCompany")) %>%

mutate(acronymCompany = acronymCompany %>% str_replace_all('\\)', '')) %>%

mutate_if(is.character,

str_trim)

## make descriptions all upper case

data_ycombinator <-

data %>%

filter(!is.na(descriptionCompany)) %>%

mutate_at(.vars = c("descriptionCompany"),

.funs = str_to_upper)

## Clean the descriptions

data_ycombinator <-

1:nrow(data_ycombinator) %>%

map_df(function(x) {

df_row <-

data_ycombinator %>% slice(x)

company <-

df_row$nameCompany

remove_text <-

glue::glue("{company} IS")

df_row %>%

mutate(

descriptionCompany =

descriptionCompany %>%

str_replace_all(remove_text, "") %>%

str_replace_all(company, '') %>%

str_trim()

)

})

# Filter out acquisitions

if (exclude_acquisitions) {

data_ycombinator <-

data_ycombinator %>%

filter(!isCompanyAcquired)

}

## Return only dead companies

if (only_defunct_companies) {

data_ycombinator <-

data_ycombinator %>%

filter(isCompanyDead)

}

## Specify verticals

if (!purrr::is_null(verticals)) {

glue::glue(

"Limiting training YC data to {length(verticals)} verticles:\n

{str_c(verticals, collapse =', ')}

"

) %>%

message()

data_ycombinator <-

data_ycombinator %>%

filter(verticalCompany %in% verticals)

}

if (!purrr::is_null(years)) {

glue::glue(

"Limiting training YC data to the following years:\n

{str_c(years, collapse =', ')}

"

) %>%

message()

data_ycombinator <-

data_ycombinator %>%

filter(yearYC %in% years)

}

if (!purrr::is_null(season)) {

glue::glue(

"Limiting training YC data to the following season:\n

{str_c(season, collapse =', ')}

"

) %>%

message()

data_ycombinator <-

data_ycombinator %>%

filter(nameSeason %in% season)

}

data_ycombinator

}Step 3 – Build text generating neural network

Now we are ready to build a function that uses text to generate text via a neural network.

I’ve coined this function kerasify_text.

I am not getting into the specifics of how neural networks work. For those who are curious and have a bit of time I recommend this video.

What we are going to do is build function that from a vector of text, mixed with a host of neural network parameters spits out machine learning driven text.

These parameters are the number of epochs, diversity, batch size, learning rate, maximum number of characters and maximum sentence length.

We are also going to provide a label for what the text output we are generating should be called and whether we want to see the results as the neural network works its magic.

The final result will be a data frame with the text and information about how the machine learning output was generated.

kerasify_text <-

function(input_text = text_blob,

output_column_name = "nameCompanyFake",

regurgitate_output = TRUE,

epochs = 5,

learning_rate = 0.01,

maximum_length = 3,

maximum_iterations = 50,

batch_size = 128,

diversities = c(0.2, 0.5, 1, 1.2),

maximum_chars = 500) {

if (!'input_text' %>% exists()) {

stop("Please enter input text")

}

### MODIFIED FROM https://keras.rstudio.com/articles/examples/lstm_text_generation.html

iterations <- 1:maximum_iterations

max_chars <- 1:maximum_chars

input_text <-

input_text %>%

str_c(collapse = "\n") %>%

tokenize_characters(strip_non_alphanum = FALSE, simplify = TRUE)

chars <-

input_text %>%

unique() %>%

sort()

glue::glue("Total characters for neural network: {length(chars)}") %>%

message()

# cut the input_text in semi-redundant sequences of maximum_length characters

dataset <-

map(seq(1, length(input_text) - maximum_length - 1, by = 3),

~ list(sentence = input_text[.x:(.x + maximum_length - 1)], next_char = input_text[.x + maximum_length]))

dataset <- transpose(dataset)

# vectorization

input_matrix <-

array(0, dim = c(length(dataset$sentence), maximum_length, length(chars)))

output_array <-

array(0, dim = c(length(dataset$sentence), length(chars)))

for (i in 1:length(dataset$sentence)) {

input_matrix[i, ,] <- sapply(chars, function(x) {

as.integer(x == dataset$sentence[[i]])

})

output_array[i,] <- as.integer(chars == dataset$next_char[[i]])

}

### Model Definition

model <-

keras_model_sequential()

model %>%

layer_lstm(batch_size, input_shape = c(maximum_length, length(chars))) %>%

layer_dense(length(chars)) %>%

layer_activation("softmax")

optimizer <-

optimizer_rmsprop(lr = learning_rate)

model %>%

compile(loss = "categorical_crossentropy",

optimizer = optimizer)

## Model Training

sample_mod <- function(preds, temperature = 1) {

preds <- log(preds) / temperature

exp_preds <- exp(preds)

preds <- exp_preds / sum(exp(preds))

rmultinom(1, 1, preds) %>%

as.integer() %>%

which.max()

}

all_data <-

data_frame()

for (iteration in iterations) {

glue::glue("iteration: {iteration} \n\n")

model %>%

fit(input_matrix,

output_array,

batch_size = batch_size,

epochs = epochs)

for (diversity in diversities) {

glue::glue("diversity: {diversity} \n\n")

start_index <-

sample(1:(length(input_text) - maximum_length), size = 1)

sentence <-

input_text[start_index:(start_index + maximum_length - 1)]

generated <- ""

for (i in max_chars) {

x <- sapply(chars, function(x) {

as.integer(x == sentence)

})

dim(x) <- c(1, dim(x))

preds <- predict(model, x)

next_index <- sample_mod(preds, diversity)

next_char <- chars[next_index]

generated <- str_c(generated, next_char, collapse = "")

sentence <- c(sentence[-1], next_char)

}

generated <- generated %>% str_to_upper()

## Spits out names

if (regurgitate_output) {

glue::glue("\n{cat(generated)}\n")

}

generated_names <-

str_split(generated, '\n') %>%

flatten_chr() %>%

str_to_upper() %>%

unique() %>% str_trim() %>% {

.[!. == '']

}

## Assing to DF

df <-

data_frame(UQ(output_column_name) := generated_names)

df <-

df %>%

mutate(idIteration = iteration,

idDiversity = diversity) %>%

dplyr::select(idIteration, idDiversity, everything())

all_data <-

all_data %>%

bind_rows(df)

}

}

## Return results

all_data

}Warning…

This function is extremely computationally burdensome.

Depending on the size of your text corpus, input parameters and computer processing power the function will vary widely in how long it takes to work.

Step 5 – Put it all together

We now have prepared the ingredients needed to generate our fake start ups.

Lets put them to use.

Message to those coding along

Even if you follow my exact parameters, your results will be different. So please don’t be alarmed.

Step 5A – Filter the YC data

The first thing we want to do is run the filter_yc_alumni function we built.

This will clean the company names and descriptions, removing companies that don’t have a description. For the purposes of today’s tutorial I also want to exclude companies that indicate they were acquired.

df_yc <-

df_yc %>%

filter_yc_alumni(exclude_acquisitions = TRUE, only_defunct_companies = FALSE)We now have our filtered data provides the text corpa needed for our neural network and our Markov model.

Step 5B – Run the neural net to generate fake start-up names

We are ready to generate the fake start-up names.

The key input for our function is the vector of real start up company names.

We can get this by using the dplyr::pull function on the nameCompany column. In machine learning speak this is going to be our training data from which our fake company names will be generated off of.

yc_companies <-

df_yc %>%

pull(nameCompany)Now that we have the input text it is time to run the function.

Before we can do that we need to decide on the other input parameters.

We are going to call the output nameCompanyFake since we are generating fake companies. I want to see the output as it is generated so I will leave that TRUE. I want the model to have a decent number of looks at the training data each go around which we can do by setting the number of epochs to 25. We don’t want any companies with more than 4 words in the name so we set the maximum length to 4. We also don’t want them to exceed 300 characters in length so we set that maximum chars parameter equal to 300. We will set the batch size equal to 140. We can use the default parameters for learning rate and diversity so we don’t have to include them in the function call. Lastly I want the model to run 50 times so we set the maximum iteration parameter equal to 50.

We are now ready to go but please be patient this is going to take a while!!

df_names <-

kerasify_text(

input_text = yc_companies,

output_column_name = "nameCompanyFake",

regurgitate_output = TRUE,

epochs = 25,

maximum_length = 4,

maximum_iterations = 50,

batch_size = 140,

maximum_chars = 300

)Once the neural network is done you should see a data frame with a few thousand results. We have a bit more work to do before we are done with the names though.

The only thing we want from the output are the fake company names.

We can do this by selecting that variable. There are likely be repeat names and we only want the unique names and so to return only unique names we will use the dplyr::distinct function.

Next we will add a variable that calculates the number of characters in the name.

We only want to include names that contain between 2 and 15 characters. Using the dplyr::filter and dplyr::between functions achieve this.

Finally, we need to add an ID column that allows us to join the company names with the company descriptions we wll soon generate.

df_cos <-

df_names %>%

select(nameCompanyFake) %>%

distinct() %>%

mutate(charName = nchar(nameCompanyFake)) %>%

filter(charName %>% between(2, 15)) %>%

mutate(idRow = 1:n()) %>%

dplyr::select(-c(charName))Step 5C – Generate fake start-up descriptions with our Markov model

Now we are ready to generate our fake descriptions.

Similar to what we did before we will pull in our input text variable, in this case the company descriptions.

company_descriptions <-

df_yc %>%

pull(descriptionCompany)Now we are ready to define the parameters and run the model.

We want to call the output dsecriptionCompanyFake. We will keep the Markov state to the default of 2. We want to limit the length of the sentence to no more than 400 characters and finally we will run the model so there is a description for each of the fake company names we generated by making the number of iterations equal to the number of rows in the company name data.

df_descriptions <-

markovify_text(

input_text = company_descriptions,

output_column_name = 'descriptionCompanyFake',

markov_state_size = 2,

maximum_sentence_length = 400,

iterations = nrow(df_cos)

)You should now see a data frame with the same number of descriptions as company names. This function already includes an ID column so we don’t need to add one.

Step 5D – Join everything together to create the final product

We now have data containing fake names and descriptions all that is left to do is combine them together.

To do this we will take the df_cos data frame and use the dplyr::left_join function to bind together df_descriptions data.

The final step is to filter out any NA company descriptions which we may or may not have.

all_data <-

df_cos %>%

left_join(df_descriptions) %>%

dplyr::select(-idRow) %>%

filter(!descriptionCompanyFake %>% is.na())Time to Explore

Now after all this hard work it is time to enjoy the fruits, or more appropriately, the cold-pressed juice pods of our labor 😃😎😎

We generated a few thousand companies, lets take a look at 10 random ones.

To do that we will use the `dplyr::sample_n`` function.

all_data %>%

sample_n(10) %>%

formattable::formattable()| nameCompanyFake | descriptionCompanyFake |

|---|---|

| CALLER | A SIMPLE, AND INTUITIVE VISUAL INSTRUCTION, BASED ON 56+ PHOTO PROPERTIES. |

| DEARDHEAR | BUILDS WORKFLOW & COLLABORATION SOFTWARE FOR SMALL AND MID-MARKET BUSINESSES. |

| R8VETURE HEALTH | PROVIDES NATIVE VIDEO ADVERTISING EXPERIENCE FOR KIDS, COUPLES AND ATTORNEYS. |

| STRICWITEA | ENABLES LOCAL GOVERNMENT TO ACCEPT AND CURATE DIGITAL CONTENT. |

| HIMY | IF YOUR STARTUP IS LOOKING FOR A SHARE OF THEIR TEMPORARY STAFFING NEEDS. |

| HYPART | WE’RE A B2B MARKETPLACE FOR INSURANCE SECURITIZATION. |

| LATT | TABLETS ON RESTAURANT TABLES, SO GUESTS CAN ORDER, PAY AND PLAY GAMES FROM THEIR SMARTPHONE IN LESS THAN 5 MINUTES. |

| AIYDLRMSS | FLOYD IS HEROKU FOR MACHINE LEARNING TO HELP YOU GET FEEDBACK AND ITERATE ON THEIR DESIGNS. |

| KIPPI | WE BUILD CROWDFUNDING SITES THAT LETS WOMEN RENT DRESSES FROM EACH OTHER. |

| TATT | CCG EMPOWERS CLINICIANS TO MAKE PR SCALE IN THE REAL WORLD. |

Take a look at that, 10 machine learning generated start-up ideas that could be the next billion dollar unicorn and probably are a better idea to me at least than Juicero’s pitch.

Now lets take a look at all 4507 machine learning generated start up ideas and make them available to the whole world for the rest of time.

Feel free to peruse, export and even use them for possible start-up ideas of your own. I just ask that you remember me if anything ever comes of the idea and drop me a line about how you encountered this post after you raise your first successful round of funding!

library(DT)

all_data %>%

datatable(

filter = 'top',

extensions = c('Responsive', 'Buttons'),

options = list(

dom = 'Bfrtip',

buttons = c('csv', 'excel', 'pdf', 'print'),

pageLength = 5,

autoWidth = TRUE,

lengthMenu = c(5, 25, 50, 100, 500)

)

)That wraps up the second portion of this post. I encourage you to play around a bit with these functions. Change around the parameters and try filtering the input data to focus on specific verticals or company outcomes and see how the results differ.

Parting notes

First, I want to thank Antonio for inspiring this post.

Your humor and wit lit the fire I needed to finally publicly showcase how to use my packages together and most importantly take on this advanced machine learning task.

I also want to thank the RStudio team.

Their keras and reticulate packages are absolutely incredible and make it seamless to both integrate Python with R. These packages handle all the machine learning heavy lifting with ease and grace

Finally I want to thank everyone reading this.

I hope you found it engaging and useful. There aren’t many tutorials on integrating Python with R or generating text from machine learning functions. For those that were able to complete the second part I hope that I did an adequate job of trying to fill that void in an insightful and engaging manner.

I also encourage you to use these functions with other text corupa that interest you.

You can impress your friends with neural network generated rap music or a markovifyed version of Stephen A. Smith’s twitter.

Use your imagination and machine learning can take you to some fun and interesting places.

Until next time…

Alex Bresler

Alex Bresler